AI-100 Exam Questions - Online Test

Proper study guides for the up-to-date Microsoft Designing and Implementing an Azure AI Solution certification begin with Microsoft AI-100 preparation products, which are designed to deliver accurate AI-100 questions and help you pass the AI-100 test on your first attempt. Try the free AI-100 demo right now.

Check AI-100 free dumps before getting the full version:

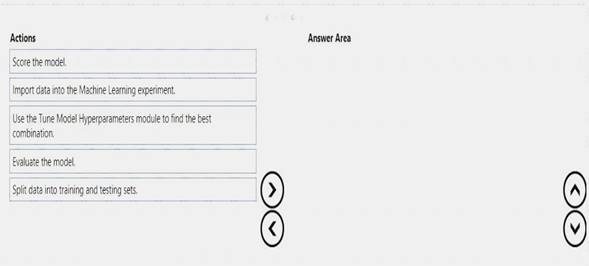

NEW QUESTION 1

You need to build a pipeline for an Azure Machine Learning experiment.

In which order should you perform the actions? To answer, move all actions from the list of actions to the answer area and arrange them in the correct order.


Explanation:

References:

https://azure.microsoft.com/en-in/blog/experimentation-using-azure-machine-learning/

https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/machine-learning-modules

NEW QUESTION 2

You are designing an AI solution that will provide feedback to teachers who train students over the Internet. The students will be in classrooms located in remote areas. The solution will capture video and audio data of the students in the classrooms.

You need to recommend Azure Cognitive Services for the AI solution to meet the following requirements:

- Alert teachers if a student seems angry or distracted.

- Identify each student in the classrooms for attendance purposes.

- Allow the teachers to log the text of conversations between themselves and the students.

Which Cognitive Services should you recommend?

- A. Computer Vision, Text Analytics, and Face API

- B. Video Indexer, Face API, and Text Analytics

- C. Computer Vision, Speech to Text, and Text Analytics

- D. Text Analytics, QnA Maker, and Computer Vision

- E. Video Indexer, Speech to Text, and Face API

Answer: E

Explanation:

Azure Video Indexer is a cloud application built on Azure Media Analytics, Azure Search, and Cognitive Services (such as the Face API, Microsoft Translator, the Computer Vision API, and Custom Speech Service). It enables you to extract insights from your videos by using Video Indexer video and audio models.

Face API enables you to search, identify, and match faces in your private repository of up to 1 million people. The Face API now integrates emotion recognition: for each face in the image, it returns a confidence score for each of a set of emotions, such as anger, contempt, disgust, fear, happiness, neutral, sadness, and surprise. These emotions are understood to be cross-culturally and universally communicated with particular facial expressions.

Speech-to-text from Azure Speech Services, also known as speech transcription, enables real-time transcription of audio streams into text that your applications, tools, or devices can consume, display, and act on as command input. This service is powered by the same recognition technology that Microsoft uses for Cortana and Office products, and it works seamlessly with the Speech Services translation and text-to-speech capabilities.
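To make the "alert teachers if a student seems angry" requirement concrete, here is a minimal sketch that post-processes a Face API detect response. It assumes the response shape of the Face API v1.0 detect call with `returnFaceAttributes=emotion` (a list of faces, each with `faceId` and `faceAttributes.emotion`); the sample face IDs, scores, and the 0.5 alert threshold are hypothetical. It covers only the anger signal, not "distracted", and Microsoft has since retired the emotion attribute, so treat it as an illustration of the concept rather than a current integration.

```python
from typing import Dict, List

# Emotion keys returned by the Face API v1.0 detect call when
# returnFaceAttributes=emotion is requested.
EMOTIONS = ("anger", "contempt", "disgust", "fear",
            "happiness", "neutral", "sadness", "surprise")


def dominant_emotion(face: Dict) -> str:
    """Return the emotion with the highest confidence for one detected face."""
    scores = face["faceAttributes"]["emotion"]
    return max(EMOTIONS, key=lambda name: scores.get(name, 0.0))


def faces_to_alert(faces: List[Dict], threshold: float = 0.5) -> List[str]:
    """Return the faceIds whose anger confidence meets a hypothetical alert threshold."""
    return [
        face["faceId"]
        for face in faces
        if face["faceAttributes"]["emotion"].get("anger", 0.0) >= threshold
    ]


# Hypothetical detect response (real faceIds are GUIDs).
sample = [
    {"faceId": "student-1", "faceAttributes": {"emotion": {"anger": 0.82, "neutral": 0.10}}},
    {"faceId": "student-2", "faceAttributes": {"emotion": {"anger": 0.01, "neutral": 0.95}}},
]

if __name__ == "__main__":
    print(faces_to_alert(sample))       # ['student-1']
    print(dominant_emotion(sample[1]))  # neutral
```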

NEW QUESTION 3

You are designing an AI solution in Azure that will perform image classification.

You need to identify which processing platform will provide you with the ability to update the logic over time. The solution must have the lowest latency for inferencing without having to batch.

Which compute target should you identify?

- A. graphics processing units (GPUs)

- B. field-programmable gate arrays (FPGAs)

- C. central processing units (CPUs)

- D. application-specific integrated circuits (ASICs)

Answer: B

Explanation:

FPGAs, such as those available on Azure, provide performance close to that of ASICs. They are also flexible and can be reconfigured over time to implement new logic.

NEW QUESTION 4

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are deploying an Azure Machine Learning model to an Azure Kubernetes Service (AKS) container. You need to monitor the accuracy of each run of the model.

Solution: You modify the scoring file.

Does this meet the goal?

- A. Yes

- B. No

Answer: B

NEW QUESTION 5

You need to deploy cognitive search. You provision an Azure Search service. What should you do next?

- A. Search by using the .NET SDK.

- B. Load data.

- C. Search by using the REST API.

- D. Create an index.

Answer: D

Explanation:

You create a data source, a skillset, and an index. These three components become part of an indexer that pulls each piece together into a single multi-phased operation.

Note: At the start of the pipeline, you have unstructured text or non-text content (such as image and scanned document JPEG files). Data must exist in an Azure data storage service that can be accessed by an indexer.

Indexers can "crack" source documents to extract text from source data.

References:

https://docs.microsoft.com/en-us/azure/search/cognitive-search-tutorial-blob
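Creating the index is a single REST call against the search service. The sketch below only prepares that request with the Python standard library; it assumes the Create or Update Index endpoint (`PUT https://<service>.search.windows.net/indexes/<name>?api-version=...`) authenticated with an admin `api-key` header. The service name, index name, key, and field list are hypothetical placeholders for text and key phrases a skillset might produce, and `2020-06-30` is just one GA API version.

```python
import json
import urllib.request

API_VERSION = "2020-06-30"  # one GA REST API version for Azure Cognitive Search


def build_index_definition(index_name: str) -> dict:
    """Hypothetical index schema for text and key phrases extracted by a skillset."""
    return {
        "name": index_name,
        "fields": [
            # Every index needs exactly one key field of type Edm.String.
            {"name": "id", "type": "Edm.String", "key": True, "searchable": False},
            {"name": "content", "type": "Edm.String", "searchable": True},
            {"name": "keyPhrases", "type": "Collection(Edm.String)", "searchable": True},
        ],
    }


def build_create_index_request(service: str, index_name: str,
                               api_key: str) -> urllib.request.Request:
    """Prepare, but do not send, the Create or Update Index REST call."""
    url = (f"https://{service}.search.windows.net/indexes/{index_name}"
           f"?api-version={API_VERSION}")
    body = json.dumps(build_index_definition(index_name)).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json", "api-key": api_key},
    )


if __name__ == "__main__":
    req = build_create_index_request("my-search-service", "demo-index", "<admin-key>")
    print(req.get_method(), req.full_url)
    # PUT https://my-search-service.search.windows.net/indexes/demo-index?api-version=2020-06-30
    # urllib.request.urlopen(req) would actually send it.
```

Once the index exists, the data source and skillset are wired to it through an indexer, as described above.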

NEW QUESTION 6

You plan to build an application that will perform predictive analytics. Users will be able to consume the application data by using Microsoft Power BI or a custom website.

You need to ensure that you can audit application usage. Which auditing solution should you use?

- A. Azure Storage Analytics

- B. Azure Application Insights

- C. Azure diagnostic logs

- D. Azure Active Directory (Azure AD) reporting

Answer: D

Explanation:

References:

https://docs.microsoft.com/en-us/azure/active-directory/reports-monitoring/concept-audit-logs

NEW QUESTION 7

You have an Azure Machine Learning experiment that must comply with GDPR regulations. You need to track compliance of the experiment and store documentation about the experiment. What should you use?

- A. Azure Table storage

- B. Azure Security Center

- C. an Azure Log Analytics workspace

- D. Compliance Manager

Answer: D

Explanation:

References:

https://azure.microsoft.com/en-us/blog/new-capabilities-to-enable-robust-gdpr-compliance/

NEW QUESTION 8

You have an AI application that uses keys in Azure Key Vault.

Recently, a key used by the application was deleted accidentally and was unrecoverable. You need to ensure that if a key is deleted, it is retained in the key vault for 90 days. Which two features should you configure? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A. the expiration date on the keys

- B. soft delete

- C. purge protection

- D. auditors

- E. the activation date on the keys

Answer: BC

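Explanation:

Soft delete keeps a deleted key in the vault for a retention period (90 days by default), during which the key can be recovered. Purge protection prevents the deleted key from being permanently removed (purged) until that retention period has elapsed.

References:

https://docs.microsoft.com/en-us/azure/key-vault/general/soft-delete-overview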
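The interaction between soft delete and purge protection can be captured in a small toy model. This is not the Key Vault SDK: `VaultSettings`, `DeletedKey`, and the helper functions are hypothetical names that only encode the rules that soft delete retains a deleted key for a configurable retention period (7 to 90 days, 90 by default) and that purge protection blocks manual purges during that period.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class VaultSettings:
    """Toy stand-in for two key vault properties (hypothetical, not the SDK)."""
    soft_delete: bool
    purge_protection: bool
    retention_days: int = 90  # Key Vault allows 7 to 90 days; 90 is the default


@dataclass
class DeletedKey:
    name: str
    deleted_on: datetime


def scheduled_purge_date(key: DeletedKey, vault: VaultSettings) -> Optional[datetime]:
    """When the vault permanently removes a deleted key, or None if it is lost at once."""
    if not vault.soft_delete:
        return None  # without soft delete there is nothing left to recover
    return key.deleted_on + timedelta(days=vault.retention_days)


def is_recoverable(key: DeletedKey, vault: VaultSettings, now: datetime) -> bool:
    """True until the retention period ends, assuming nobody purges the key manually."""
    purge_at = scheduled_purge_date(key, vault)
    return purge_at is not None and now < purge_at


def manual_purge_allowed(vault: VaultSettings) -> bool:
    """Purge protection blocks manual purges during the retention period."""
    return vault.soft_delete and not vault.purge_protection


if __name__ == "__main__":
    vault = VaultSettings(soft_delete=True, purge_protection=True)
    key = DeletedKey("app-signing-key", datetime(2021, 1, 1))
    print(scheduled_purge_date(key, vault))  # 2021-04-01 00:00:00
    print(manual_purge_allowed(vault))       # False
```

With only soft delete enabled, someone could still purge the key early; adding purge protection is what guarantees the full 90-day window.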

NEW QUESTION 9

You are developing a mobile application that will perform optical character recognition (OCR) from photos. The application will annotate the photos by using metadata, store the photos in Azure Blob storage, and then score the photos by using an Azure Machine Learning model.

What should you use to process the data?

- A. Azure Event Hubs

- B. Azure Functions

- C. Azure Stream Analytics

- D. Azure Logic Apps

Answer: A

NEW QUESTION 10

Your company has a data team of Transact-SQL experts.

You plan to ingest data from multiple sources into Azure Event Hubs.

You need to recommend which technology the data team should use to move and query data from Event Hubs to Azure Storage. The solution must leverage the data team’s existing skills.

What is the best recommendation to achieve the goal? More than one answer choice may achieve the goal.

- A. Azure Notification Hubs

- B. Azure Event Grid

- C. Apache Kafka streams

- D. Azure Stream Analytics

Answer: B

Explanation:

Event Hubs Capture is the easiest way to automatically deliver streamed data in Event Hubs to an Azure Blob storage or Azure Data Lake store. You can subsequently process and deliver the data to any other storage destinations of your choice, such as SQL Data Warehouse or Cosmos DB.

You can capture data from your event hub into a SQL data warehouse by using an Azure function triggered by Event Grid.

Example:

First, you create an event hub with the Capture feature enabled and set an Azure blob storage as the destination. Data generated by WindTurbineGenerator is streamed into the event hub and is automatically captured into Azure Storage as Avro files.

Next, you create an Azure Event Grid subscription with the Event Hubs namespace as its source and the Azure Function endpoint as its destination.

Whenever a new Avro file is delivered to the Azure Storage blob by the Event Hubs Capture feature, Event Grid notifies the Azure Function with the blob URI. The Function then migrates data from the blob to a SQL data warehouse.

References:

https://docs.microsoft.com/en-us/azure/event-hubs/store-captured-data-data-warehouse
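The first thing the Azure Function in this flow has to do is pull the blob URI out of the Event Grid notification. A minimal, framework-free sketch follows; it assumes the Event Grid schema for the `Microsoft.EventHub.CaptureFileCreated` event, whose `data.fileUrl` holds the new Avro file's URL. The storage account and path in the sample are hypothetical, and reading the Avro file and loading it into the data warehouse are deliberately left out.

```python
from typing import Dict, List

CAPTURE_EVENT = "Microsoft.EventHub.CaptureFileCreated"


def capture_file_urls(events: List[Dict]) -> List[str]:
    """Return the blob URLs of newly captured Avro files in an Event Grid batch."""
    return [
        event["data"]["fileUrl"]
        for event in events
        if event.get("eventType") == CAPTURE_EVENT  # ignore unrelated events
    ]


# Hypothetical batch: one capture notification and one unrelated storage event.
sample_batch = [
    {
        "eventType": CAPTURE_EVENT,
        "data": {
            "fileUrl": "https://examplestore.blob.core.windows.net/capture/hub/0/00.avro",
            "fileType": "AzureBlockBlob",
            "partitionId": "0",
        },
    },
    {
        "eventType": "Microsoft.Storage.BlobCreated",
        "data": {"url": "https://examplestore.blob.core.windows.net/other/readme.txt"},
    },
]

if __name__ == "__main__":
    # Prints a one-element list containing the .avro URL.
    print(capture_file_urls(sample_batch))
```

In a deployed Function, the Event Grid trigger typically hands over one event per invocation; the list-based helper simply keeps the sketch testable outside Azure.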

NEW QUESTION 11

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are developing an application that uses an Azure Kubernetes Service (AKS) cluster. You are troubleshooting a node issue.

You need to connect to an AKS node by using SSH.

Solution: You change the permissions of the AKS resource group, and then you create an SSH connection.

Does this meet the goal?

- A. Yes

- B. No

Answer: B

Explanation:

Instead, add an SSH key to the node, and then create an SSH connection.

References:

https://docs.microsoft.com/en-us/azure/aks/ssh

NEW QUESTION 12

You need to design the Butler chatbot solution to meet the technical requirements.

What is the best channel and pricing tier to use? More than one answer choice may achieve the goal. Select the BEST answer.

- A. standard channels that use the S1 pricing tier

- B. standard channels that use the Free pricing tier

- C. premium channels that use the Free pricing tier

- D. premium channels that use the S1 pricing tier

Answer: D

Explanation:

References:

https://azure.microsoft.com/en-in/pricing/details/bot-service/

NEW QUESTION 13

You design an AI solution that uses an Azure Stream Analytics job to process data from an Azure IoT hub. The IoT hub receives time series data from thousands of IoT devices at a factory.

The job outputs millions of messages per second. Different applications consume the messages as they are available. The messages must be purged.

You need to choose an output type for the job.

What is the best output type to achieve the goal? More than one answer choice may achieve the goal.

- A. Azure Event Hubs

- B. Azure SQL Database

- C. Azure Blob storage

- D. Azure Cosmos DB

Answer: D

Explanation:

Stream Analytics can target Azure Cosmos DB for JSON output, enabling data archiving and low-latency queries on unstructured JSON data.

References:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-documentdb-output

NEW QUESTION 14

You plan to implement a new data warehouse for a planned AI solution. You have the following information regarding the data warehouse:

- The data files will be available in one week.

- Most queries that will be executed against the data warehouse will be ad hoc queries.

- The schemas of data files that will be loaded to the data warehouse will change often.

- One month after the planned implementation, the data warehouse will contain 15 TB of data.

You need to recommend a database solution to support the planned implementation.

What two solutions should you include in the recommendation? Each correct answer is a complete solution. NOTE: Each correct selection is worth one point.

- A. Apache Hadoop

- B. Apache Spark

- C. a Microsoft Azure SQL database

- D. an Azure virtual machine that runs Microsoft SQL Server

Answer: AB

NEW QUESTION 15

Your company has factories in 10 countries. Each factory contains several thousand IoT devices. The devices present status and trending data on a dashboard.

You need to ingest the data from the IoT devices into a data warehouse.

Which two Microsoft Azure technologies should you use? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A. Azure Stream Analytics

- B. Azure Data Factory

- C. an Azure HDInsight cluster

- D. Azure Batch

- E. Azure Data Lake

Answer: CE

Explanation:

Azure Data Lake Store (ADLS) serves as the hyper-scale storage layer, and HDInsight serves as the Hadoop-based compute engine. Together, they can be used to prepare large amounts of data for loading into a data warehouse.

References:

https://www.blue-granite.com/blog/azure-data-lake-analytics-holds-a-unique-spot-in-the-modern-dataarchitectur

NEW QUESTION 16

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are deploying an Azure Machine Learning model to an Azure Kubernetes Service (AKS) container. You need to monitor the accuracy of each run of the model.

Solution: You configure Azure Application Insights.

Does this meet the goal?

- A. Yes

- B. No

Answer: A
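Once Application Insights is enabled for the AKS web service (in the v1 Azure ML SDK, for example, through `enable_app_insights=True` on the AKS deployment configuration), output that the scoring script writes to stdout is collected as traces. The sketch below is a hypothetical `score.py`-style pair of `init()`/`run()` functions that emits one structured log line per request, which could later be joined with ground-truth labels to estimate accuracy. The stub model, payload shape, and log fields are all assumptions.

```python
import json
import time

model = None


def _stub_model(rows):
    """Hypothetical stand-in for a trained model: label a row 1 if its sum is positive."""
    return [1 if sum(row) > 0 else 0 for row in rows]


def init():
    """Called once when the container starts; a real script would load a registered model."""
    global model
    model = _stub_model


def run(raw_data: str) -> str:
    """Score one request and print a structured log line for Application Insights."""
    payload = json.loads(raw_data)
    predictions = model(payload["data"])
    # stdout is collected as trace telemetry when Application Insights is enabled,
    # so these records can be queried later and compared with true labels.
    print(json.dumps({
        "event": "prediction",
        "timestamp": time.time(),
        "count": len(predictions),
        "predictions": predictions,
    }))
    return json.dumps({"predictions": predictions})


if __name__ == "__main__":
    init()
    print(run(json.dumps({"data": [[1, 2], [-3, 1]]})))
```

Accuracy itself still requires labeled outcomes; the telemetry only records what the model predicted for each run.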

NEW QUESTION 17

Your company has 1,000 AI developers who are responsible for provisioning environments in Azure. You need to control the type, size, and location of the resources that the developers can provision. What should you use?

- A. Azure Key Vault

- B. Azure service principals

- C. Azure managed identities

- D. Azure Security Center

- E. Azure Policy

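Answer: E

Explanation:

Azure Policy lets you create, assign, and manage policy definitions that enforce rules on resources as they are created or updated. Built-in definitions such as Allowed locations, Allowed resource types, and Allowed virtual machine size SKUs restrict the location, type, and size of the resources that developers can provision. Service principals, by contrast, only give an application a credential and permissions; they do not limit which resource types, sizes, or locations can be deployed.

References:

https://docs.microsoft.com/en-us/azure/governance/policy/overview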

NEW QUESTION 18

You are building an Azure Analysis Services cube for your AI deployment.

The source data for the cube is located in an on-premises network in a Microsoft SQL Server database. You need to ensure that the Azure Analysis Services service can access the source data.

What should you deploy to your Azure subscription?

- A. a site-to-site VPN

- B. a network gateway

- C. a data gateway

- D. Azure Data Factory

Answer: C

Explanation:

You can use the On-premises Data Gateway with Azure Analysis Services. This means you can connect tabular models hosted in Azure Analysis Services to your on-premises data sources through the On-premises Data Gateway.

References:

https://biinsight.com/on-premises-data-gateway-for-azure-analysis-services/

NEW QUESTION 19

You need to build an API pipeline that analyzes streaming data. The pipeline will perform the following:

- Visual text recognition

- Audio transcription

- Sentiment analysis

- Face detection

Which Azure Cognitive Services should you use in the pipeline?

- A. Custom Speech Service

- B. Face API

- C. Text Analytics

- D. Video Indexer

Answer: D

Explanation:

Azure Video Indexer is a cloud application built on Azure Media Analytics, Azure Search, and Cognitive Services (such as the Face API, Microsoft Translator, the Computer Vision API, and Custom Speech Service). It enables you to extract insights from your videos by using the Video Indexer video and audio models described below:

- Visual text recognition (OCR): Extracts text that is visually displayed in the video.

- Audio transcription: Converts speech to text in 12 languages and allows extensions.

- Sentiment analysis: Identifies positive, negative, and neutral sentiments from speech and visual text.

- Face detection: Detects and groups faces appearing in the video.

References:

https://docs.microsoft.com/en-us/azure/media-services/video-indexer/video-indexer-overview

NEW QUESTION 20

You deploy an application that performs sentiment analysis on the data stored in Azure Cosmos DB.

Recently, you loaded a large amount of data to the database. The data was for a customer named Contoso, Ltd. You discover that queries for the Contoso data are slow to complete, and the queries slow the entire application.

You need to reduce the amount of time it takes for the queries to complete. The solution must minimize costs. What is the best way to achieve the goal? More than one answer choice may achieve the goal. Select the BEST answer.

- A. Change the request units.

- B. Change the partitioning strategy.

- C. Change the transaction isolation level.

- D. Migrate the data to the Cosmos DB database.

Answer: B

Explanation:

References:

https://docs.microsoft.com/en-us/azure/architecture/best-practices/data-partitioning
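Why does the partitioning strategy matter here? Cosmos DB places all items that share a partition key value in one logical partition, so keying on the customer name alone funnels the entire Contoso load into a single hot partition. The sketch below does not call Cosmos DB; it only counts how hypothetical documents would spread under the assumed original key versus a synthetic key (customer name plus a bucket derived from a numeric `id`), one common way to spread a large tenant. The field names, bucket count, and numeric ids are assumptions.

```python
from collections import Counter
from typing import Callable, Dict, List


def customer_key(doc: Dict) -> str:
    """Assumed original strategy: partition on the customer name alone."""
    return doc["customer"]


def synthetic_key(doc: Dict, buckets: int = 4) -> str:
    """Hypothetical synthetic key: customer name plus a bucket from a numeric id."""
    return f"{doc['customer']}-{int(doc['id']) % buckets}"


def partition_sizes(docs: List[Dict], key_fn: Callable[[Dict], str]) -> Counter:
    """Count how many documents land in each logical partition."""
    return Counter(key_fn(doc) for doc in docs)


if __name__ == "__main__":
    docs = [{"id": str(i), "customer": "Contoso"} for i in range(1000)]
    docs += [{"id": str(1000 + i), "customer": "Fabrikam"} for i in range(10)]
    print(partition_sizes(docs, customer_key))
    # Counter({'Contoso': 1000, 'Fabrikam': 10})
    print(max(partition_sizes(docs, synthetic_key).values()))
    # 250
```

The trade-off is that a query for all Contoso data must now target every bucket value, so the bucket count should stay small and predictable.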

NEW QUESTION 21

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are deploying an Azure Machine Learning model to an Azure Kubernetes Service (AKS) container. You need to monitor the accuracy of each run of the model.

Solution: You configure Azure Monitor for containers.

Does this meet the goal?

- A. Yes

- B. No

Answer: B

NEW QUESTION 22

......

Get the full AI-100 dumps in VCE and PDF from Certshared: https://www.certshared.com/exam/AI-100/ (new 101 Q&As version)
