CCA-500 Exam Questions - Online Test

Practice questions for the CCA-500 Cloudera Certified Administrator for Apache Hadoop (CCAH) exam:

NEW QUESTION 1

You need to analyze 60,000,000 images stored in JPEG format, each of which is approximately 25 KB. Because your Hadoop cluster isn’t optimized for storing and processing many small files, you decide to do the following actions:

1. Group the individual images into a set of larger files

2. Use the set of larger files as input for a MapReduce job that processes them directly with Python using Hadoop streaming.

Which data serialization system gives the flexibility to do this?

- A. CSV

- B. XML

- C. HTML

- D. Avro

- E. SequenceFiles

- F. JSON

Answer: E

Explanation: Sequence files support block compression and provide direct serialization and deserialization of several arbitrary data types (not just text). Sequence files can be generated as the output of other MapReduce tasks and are an efficient intermediate representation for data that is passing from one MapReduce job to another.
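To see why grouping the images matters, here is a rough sizing sketch in Python. It assumes 1 KB = 1,024 bytes, a 128 MiB dfs.blocksize, and that the NameNode tracks roughly one file object plus one block object per small file (directories are ignored). The helper names are illustrative only.

```python
NUM_IMAGES = 60_000_000
IMAGE_BYTES = 25 * 1024            # ~25 KB per JPEG (1 KB = 1024 bytes assumed)
BLOCK_BYTES = 128 * 1024 * 1024    # assumed dfs.blocksize of 128 MiB


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def namespace_objects(num_files: int, blocks_per_file: int = 1) -> int:
    """Approximate count of file + block objects the NameNode keeps in memory."""
    return num_files * (1 + blocks_per_file)


total_bytes = NUM_IMAGES * IMAGE_BYTES
packed_files = ceil_div(total_bytes, BLOCK_BYTES)  # container files of ~one block each

print(total_bytes)                                    # 1536000000000 (about 1.4 TiB)
print(namespace_objects(NUM_IMAGES))                  # 120000000 objects unpacked
print(packed_files, namespace_objects(packed_files))  # 11445 22890
```

Under these assumptions, packing cuts the objects the NameNode must hold by more than three orders of magnitude, and each map task reads a full block instead of a 25 KB fragment.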

NEW QUESTION 2

For each YARN job, the Hadoop framework generates task log files. Where are Hadoop task log files stored?

- A. Cached by the NodeManager managing the job containers, then written to a log directory on the NameNode

- B. Cached in the YARN container running the task, then copied into HDFS on job completion

- C. In HDFS, in the directory of the user who generates the job

- D. On the local disk of the slave node running the task

Answer: D

NEW QUESTION 3

On a cluster running MapReduce v2 (MRv2) on YARN, a MapReduce job is given a directory of 10 plain text files as its input directory. Each file is made up of 3 HDFS blocks. How many Mappers will run?

- A. We cannot say; the number of Mappers is determined by the ResourceManager

- B. We cannot say; the number of Mappers is determined by the developer

- C. 30

- D. 3

- E. 10

- F. We cannot say; the number of mappers is determined by the ApplicationMaster

Answer: C
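MapReduce runs one map task per input split. Here is a Python sketch of the split arithmetic, modeled on FileInputFormat.getSplits in Hadoop 2: the split size is max(minSize, min(maxSize, blockSize)), and a file keeps being cut while the remainder exceeds 1.1 split sizes (the SPLIT_SLOP factor). It assumes uncompressed, splittable text files, default min/max split settings, and a 128 MiB block; the function names are illustrative, not Hadoop's API.

```python
SPLIT_SLOP = 1.1  # a final chunk up to 10% over one split size is not cut again


def compute_split_size(block_size, min_size=1, max_size=2**63 - 1):
    # splitSize = max(minSize, min(maxSize, blockSize))
    return max(min_size, min(max_size, block_size))


def splits_for_file(length, split_size):
    """Number of input splits for one splittable file of `length` bytes."""
    if length <= 0:
        raise ValueError("this sketch assumes non-empty files")
    remaining = length
    splits = 0
    while remaining / split_size > SPLIT_SLOP:
        splits += 1
        remaining -= split_size
    if remaining != 0:
        splits += 1  # whatever is left becomes the last split
    return splits


BLOCK = 128 * 1024 * 1024          # assumed dfs.blocksize
split_size = compute_split_size(BLOCK)
files = [3 * BLOCK] * 10           # ten files, each three full blocks long
print(sum(splits_for_file(f, split_size) for f in files))  # 30
```

With default settings each three-block file yields three splits, so 30 mappers run. Raising the minimum split size (for example with mapreduce.input.fileinputformat.split.minsize) would lower that count, and a file whose third block is at most about 10% full would produce only two splits because of the slop factor.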

NEW QUESTION 4

You have installed a cluster running HDFS and MapReduce version 2 (MRv2) on YARN. You have no dfs.hosts entries in your hdfs-site.xml configuration file. You configure a new worker node by setting fs.default.name in its configuration files to point to the NameNode on your cluster, and you start the DataNode daemon on that worker node. What do you have to do on the cluster to allow the worker node to join and start storing HDFS blocks?

- A. Without creating a dfs.hosts file or making any entries, run the command hadoop dfsadmin -refreshNodes on the NameNode

- B. Restart the NameNode

- C. Create a dfs.hosts file on the NameNode, add the worker node’s name to it, then issue the command hadoop dfsadmin -refreshNodes on the NameNode

- D. Nothing; the worker node will automatically join the cluster when the NameNode daemon is started

Answer: A

NEW QUESTION 5

A user comes to you, complaining that when she attempts to submit a Hadoop job, it fails. There is a directory in HDFS named /data/input. The JAR is named j.jar, and the driver class is named DriverClass.

She runs the command:

hadoop jar j.jar DriverClass /data/input /data/output

The error message returned includes the line:

PriviligedActionException as:training (auth:SIMPLE) cause:org.apache.hadoop.mapreduce.lib.input.InvalidInputException: Input path does not exist: file:/data/input

What is the cause of the error?

- A. The user is not authorized to run the job on the cluster

- B. The output directory already exists

- C. The name of the driver has been spelled incorrectly on the command line

- D. The directory name is misspelled in HDFS

- E. The Hadoop configuration files on the client do not point to the cluster

Answer: E
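The `file:` scheme in the error is the clue: a scheme-less path is resolved against the client's fs.defaultFS, which defaults to file:/// when the client configuration does not point at the cluster. The Python sketch below is a simplified model of that resolution, not Hadoop's Path API; the hostname is hypothetical and 8020 is simply a common NameNode RPC port.

```python
from urllib.parse import urlsplit


def qualify(path: str, default_fs: str) -> str:
    """Resolve a scheme-less absolute path against an fs.defaultFS URI."""
    parts = urlsplit(default_fs)
    authority = f"//{parts.netloc}" if parts.netloc else ""
    return f"{parts.scheme}:{authority}{path}"


# Client with default (local) configuration: the job looks on the local disk.
print(qualify("/data/input", "file:///"))
# Client configured for the cluster (hypothetical NameNode host).
print(qualify("/data/input", "hdfs://namenode.example.com:8020"))
```

The first call reproduces the `file:/data/input` path from the error message; once the client's core-site.xml points fs.defaultFS at the NameNode, the same argument resolves to HDFS.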

NEW QUESTION 6

Which two features does Kerberos security add to a Hadoop cluster? (Choose two)

- A. User authentication on all remote procedure calls (RPCs)

- B. Encryption for data during transfer between the Mappers and Reducers

- C. Encryption for data on disk (“at rest”)

- D. Authentication for user access to the cluster against a central server

- E. Root access to the cluster for users hdfs and mapred but non-root access for clients

Answer: AD

NEW QUESTION 7

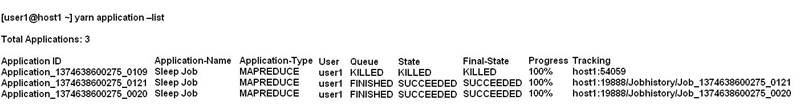

Given:

You want to clean up this list by removing jobs where the State is KILLED. What command you enter?

- A. yarn application -refreshJobHistory

- B. yarn application -kill application_1374638600275_0109

- C. yarn rmadmin -refreshQueue

- D. yarn rmadmin -kill application_1374638600275_0109

Answer: B

Explanation: Reference: http://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.1-latest/bk_using-apache-hadoop/content/common_mrv2_commands.html

NEW QUESTION 8

You are running a Hadoop cluster with MapReduce version 2 (MRv2) on YARN. You consistently see that MapReduce map tasks on your cluster are running slowly because of excessive JVM garbage collection. How do you increase the JVM heap size property to 3 GB to optimize performance?

- A. yarn.application.child.java.opts=-Xsx3072m

- B. yarn.application.child.java.opts=-Xmx3072m

- C. mapreduce.map.java.opts=-Xms3072m

- D. mapreduce.map.java.opts=-Xmx3072m

Answer: D

Explanation: Reference: http://hortonworks.com/blog/how-to-plan-and-configure-yarn-in-hdp-2-0/
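The JVM flag -Xmx sets the maximum heap, while -Xms only sets the initial heap. The YARN container limit (mapreduce.map.memory.mb) must leave room above the heap for non-heap JVM memory. This Python sketch derives both settings; the 80% heap-to-container ratio is an assumed rule of thumb, not a Hadoop default.

```python
def map_task_settings(heap_mb: int, heap_fraction=(4, 5)) -> dict:
    """Map-task heap flag plus a container size that leaves non-heap headroom.

    heap_fraction=(4, 5) encodes the assumed rule of thumb heap = 80% of the
    container; integer math avoids floating-point rounding surprises.
    """
    num, den = heap_fraction
    container_mb = -((-heap_mb * den) // num)  # ceil(heap_mb * den / num)
    return {
        "mapreduce.map.java.opts": f"-Xmx{heap_mb}m",  # -Xmx caps the heap
        "mapreduce.map.memory.mb": container_mb,        # YARN container limit
    }


for name, value in map_task_settings(3072).items():
    print(f"{name}={value}")
# mapreduce.map.java.opts=-Xmx3072m
# mapreduce.map.memory.mb=3840
```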

NEW QUESTION 9

What does CDH packaging do on install to facilitate Kerberos security setup?

- A. Automatically configures permissions for log files at $MAPRED_LOG_DIR/userlogs

- B. Creates users for hdfs and mapreduce to facilitate role assignment

- C. Creates directories for temp, hdfs, and mapreduce with the correct permissions

- D. Creates a set of pre-configured Kerberos keytab files and their permissions

- E. Creates and configures your KDC with default cluster values

Answer: B

NEW QUESTION 10

A slave node in your cluster has four 2 TB hard drives installed (4 x 2 TB). The DataNode is configured to store HDFS blocks on all disks. You set the value of the dfs.datanode.du.reserved parameter to 100 GB. How does this alter HDFS block storage?

- A. 25GB on each hard drive may not be used to store HDFS blocks

- B. 100GB on each hard drive may not be used to store HDFS blocks

- C. All hard drives may be used to store HDFS blocks as long as at least 100 GB in total is available on the node

- D. A maximum of 100 GB on each hard drive may be used to store HDFS blocks

Answer: B
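dfs.datanode.du.reserved is documented as reserved space per volume, so the 100 GB is held back on each of the four drives rather than once per node. A minimal Python sketch of the arithmetic, assuming 1 TB = 1,000 GB and ignoring any other non-HDFS data on the disks:

```python
def usable_hdfs_capacity(volume_sizes_gb, reserved_per_volume_gb):
    """Space left for HDFS blocks when du.reserved is held back on every volume."""
    return sum(max(0, size - reserved_per_volume_gb) for size in volume_sizes_gb)


volumes_gb = [2000] * 4          # four 2 TB drives (assuming 1 TB = 1000 GB)
usable = usable_hdfs_capacity(volumes_gb, 100)
print(usable)                     # 7600
print(sum(volumes_gb) - usable)   # 400 GB kept free across the node
```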

NEW QUESTION 11

You have a cluster running with the FIFO Scheduler enabled. You submit a large job A to the cluster, which you expect to run for one hour. Then you submit job B to the cluster, which you expect to run for only a couple of minutes.

You submit both jobs with the same priority.

Which two statements best describe how the FIFO Scheduler arbitrates cluster resources for a job and its tasks? (Choose two)

- A. Because there is more than a single job on the cluster, the FIFO Scheduler will enforce a limit on the percentage of resources allocated to a particular job at any given time

- B. Tasks are scheduled in the order of their job submission

- C. The order of execution of jobs may vary

- D. Given jobs A and B submitted in that order, all tasks from job A are guaranteed to finish before all tasks from job B

- E. The FIFO Scheduler will give, on average, an equal share of the cluster resources over the job lifecycle

- F. The FIFO Scheduler will pass an exception back to the client when job B is submitted, since all slots on the cluster are in use

Answer: AD

NEW QUESTION 12

Which three basic configuration parameters must you set to migrate your cluster from MapReduce 1 (MRv1) to MapReduce v2 (MRv2)? (Choose three)

- A. Configure the NodeManager to enable MapReduce services on YARN by setting the following property in yarn-site.xml:<name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value>

- B. Configure the NodeManager hostname and enable node services on YARN by setting the following property in yarn-site.xml:<name>yarn.nodemanager.hostname</name><value>your_nodeManager_hostname</value>

- C. Configure a default scheduler to run on YARN by setting the following property in mapred-site.xml:<name>mapreduce.jobtracker.taskScheduler</name><value>org.apache.hadoop.mapred.JobQueueTaskScheduler</value>

- D. Configure the number of map tasks per job on YARN by setting the following property in mapred-site.xml:<name>mapreduce.job.maps</name><value>2</value>

- E. Configure the ResourceManager hostname and enable node services on YARN by setting the following property in yarn-site.xml:<name>yarn.resourcemanager.hostname</name><value>your_resourceManager_hostname</value>

- F. Configure MapReduce as a Framework running on YARN by setting the following property in mapred-site.xml:<name>mapreduce.framework.name</name><value>yarn</value>

Answer: AEF
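A minimal MRv2 migration typically sets mapreduce.framework.name to yarn in mapred-site.xml and, in yarn-site.xml, yarn.resourcemanager.hostname plus yarn.nodemanager.aux-services set to mapreduce_shuffle (the NodeManager shuffle service). The Python sketch below renders those properties in Hadoop's configuration XML layout; the ResourceManager hostname is hypothetical, and ET.indent requires Python 3.9 or later.

```python
import xml.etree.ElementTree as ET


def hadoop_conf(props: dict) -> str:
    """Render name/value pairs as a Hadoop <configuration> document."""
    conf = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(conf, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    ET.indent(conf)  # pretty-print; available since Python 3.9
    return ET.tostring(conf, encoding="unicode")


mapred_site = {"mapreduce.framework.name": "yarn"}
yarn_site = {
    "yarn.resourcemanager.hostname": "rm.example.com",  # hypothetical host
    "yarn.nodemanager.aux-services": "mapreduce_shuffle",
}
print(hadoop_conf(mapred_site))
print(hadoop_conf(yarn_site))
```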

NEW QUESTION 13

Each node in your Hadoop cluster, running YARN, has 64 GB of memory and 24 cores. Your yarn-site.xml has the following configuration:

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>32768</value>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>12</value>

</property>

You want YARN to launch no more than 16 containers per node. What should you do?

- A. Modify yarn-site.xml with the following property:<name>yarn.scheduler.minimum-allocation-mb</name><value>2048</value>

- B. Modify yarn-site.xml with the following property:<name>yarn.scheduler.minimum-allocation-mb</name><value>4096</value>

- C. Modify yarn-site.xml with the following property:<name>yarn.nodemanager.resource.cpu-vcores</name>

- D. No action is needed: YARN’s dynamic resource allocation automatically optimizes the node memory and cores

Answer: A
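The container ceiling follows from simple division: if every request is the minimum allocation, a node fits yarn.nodemanager.resource.memory-mb divided by yarn.scheduler.minimum-allocation-mb containers. The Python sketch assumes memory-only scheduling (as with the Capacity Scheduler's DefaultResourceCalculator) unless a vcore count is passed; because requests are rounded up to multiples of the minimum, the result is an upper bound. The function and parameter names are illustrative.

```python
def max_containers(node_mb, min_alloc_mb, node_vcores=None, vcores_per_container=1):
    """Upper bound on containers per node if every request is the minimum size.

    node_vcores=None models memory-only scheduling; pass a value to also cap
    the count by vcores (as a dominant-resource calculation would).
    """
    by_memory = node_mb // min_alloc_mb
    if node_vcores is None:
        return by_memory
    return min(by_memory, node_vcores // vcores_per_container)


print(max_containers(32768, 2048))      # 16
print(max_containers(32768, 4096))      # 8
print(max_containers(32768, 2048, 12))  # 12
```

The last line shows why the vcore setting matters only when the scheduler accounts for CPU: with 12 vcores and one vcore per container, CPU rather than memory becomes the limit.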

NEW QUESTION 14

Which scheduler would you deploy to ensure that your cluster allows short jobs to finish within a reasonable time without starving long-running jobs?

- A. Complexity Fair Scheduler (CFS)

- B. Capacity Scheduler

- C. Fair Scheduler

- D. FIFO Scheduler

Answer: C

Explanation: Reference: http://hadoop.apache.org/docs/r1.2.1/fair_scheduler.html
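The intuition behind fair sharing is that running applications split the cluster, so a short job gets capacity quickly while the long job keeps running on the rest. As a simplified illustration, here is max-min fair ("water-filling") allocation with equal weights in Python. This is a textbook model, not the Fair Scheduler's actual code, and it ignores weights, minimum shares, queues and preemption.

```python
def max_min_fair(capacity, demands):
    """Water-filling: split capacity equally, capping each app at its demand."""
    alloc = {app: 0.0 for app in demands}
    unsatisfied = dict(demands)  # apps whose demand is not yet fully met
    remaining = capacity
    while unsatisfied and remaining > 0:
        share = remaining / len(unsatisfied)
        small = {app: d for app, d in unsatisfied.items() if d <= share}
        if not small:
            # Every remaining app wants more than an equal share: split evenly.
            for app in unsatisfied:
                alloc[app] += share
            break
        # Apps whose whole demand fits in an equal share get exactly that demand;
        # the leftover is redistributed among the others on the next pass.
        for app, d in small.items():
            alloc[app] += d
            remaining -= d
            del unsatisfied[app]
    return alloc


print(max_min_fair(100, {"long_job": 100, "short_job": 10}))
# {'long_job': 90.0, 'short_job': 10.0}
```

Under FIFO the short job would wait for the long job's tasks; under this model it receives its full demand at once while the long job continues with the remaining 90 units.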

NEW QUESTION 15

Identify two features/issues that YARN is designed to address: (Choose two)

- A. Standardize on a single MapReduce API

- B. Single point of failure in the NameNode

- C. Reduce complexity of the MapReduce APIs

- D. Resource pressure on the JobTracker

- E. Ability to run frameworks other than MapReduce, such as MPI

- F. HDFS latency

Answer: DE

Explanation: Reference: http://www.revelytix.com/?q=content/hadoop-ecosystem (YARN, first para)

NEW QUESTION 16

Your cluster implements HDFS High Availability (HA). Your two NameNodes are named nn01 and nn02. What occurs when you execute the command: hdfs haadmin -failover nn01 nn02?

- A. nn02 is fenced, and nn01 becomes the active NameNode

- B. nn01 is fenced, and nn02 becomes the active NameNode

- C. nn01 becomes the standby NameNode and nn02 becomes the active NameNode

- D. nn02 becomes the standby NameNode and nn01 becomes the active NameNode

Answer: B

Explanation: -failover initiates a failover between two NameNodes.

This subcommand causes a failover from the first provided NameNode to the second. If the first NameNode is in the Standby state, this command simply transitions the second to the Active state without error. If the first NameNode is in the Active state, an attempt will be made to gracefully transition it to the Standby state. If this fails, the fencing methods (as configured by dfs.ha.fencing.methods) will be attempted in order until one of the methods succeeds. Only after this process will the second NameNode be transitioned to the Active state. If no fencing method succeeds, the second NameNode will not be transitioned to the Active state, and an error will be returned.
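The failover sequence described in the explanation can be modeled as a small state machine. The Python sketch below is hypothetical: the NameNode class, its graceful_ok flag, the "fenced" label and the fencer callables are illustrations, not Hadoop's HAAdmin or FailoverController API. In a real cluster the fencers correspond to entries in dfs.ha.fencing.methods such as sshfence or shell(...).

```python
from typing import Callable, List


class NameNode:
    """Toy stand-in for a NameNode's HA state."""

    def __init__(self, name: str, state: str, graceful_ok: bool = True):
        self.name, self.state, self.graceful_ok = name, state, graceful_ok

    def to_standby(self) -> bool:
        # Simulates a graceful transition that may fail (e.g. an unresponsive node).
        if self.graceful_ok:
            self.state = "standby"
        return self.graceful_ok


def failover(first: NameNode, second: NameNode,
             fencers: List[Callable[[NameNode], bool]]) -> None:
    """Fail over from `first` to `second`, following the documented order."""
    if first.state == "active" and not first.to_standby():
        # Graceful transition failed: try fencing methods in order;
        # any() stops at the first method that succeeds.
        if not any(fence(first) for fence in fencers):
            raise RuntimeError(f"could not fence {first.name}; {second.name} stays standby")
        first.state = "fenced"  # illustrative label, not a real HA state
    second.state = "active"


nn01, nn02 = NameNode("nn01", "active"), NameNode("nn02", "standby")
failover(nn01, nn02, fencers=[])
print(nn01.state, nn02.state)  # standby active
```

When nn01 cannot step down gracefully and no fencer succeeds, the sketch raises instead of promoting nn02, mirroring the rule that the second NameNode becomes active only after the first has been safely demoted or fenced.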
